These are interesting times. And slightly scary times too. “The robots are coming for us!”

As a creative studio, we’ve always felt we should embrace these kinds of changes. AI will take away some of the work we do, but it will also help to improve parts of our production workflow.

Looking into this world can be overwhelming. With the number of AI tools now available, and the speed at which new ones are arriving, it’s easy to get lost in endless possibilities and new discoveries, both with tools to improve your workflow and those for generating new ideas. With this in mind, we wanted to set ourselves a challenge and take on a project that allowed us to explore, and do some R&D on, what these new motion capture and AI tools offer.

We’ve worked with motion capture on large rigs before, so our goal this time was to look at how advances in AI have made the practice achievable on a smaller scale, using just a mobile phone.

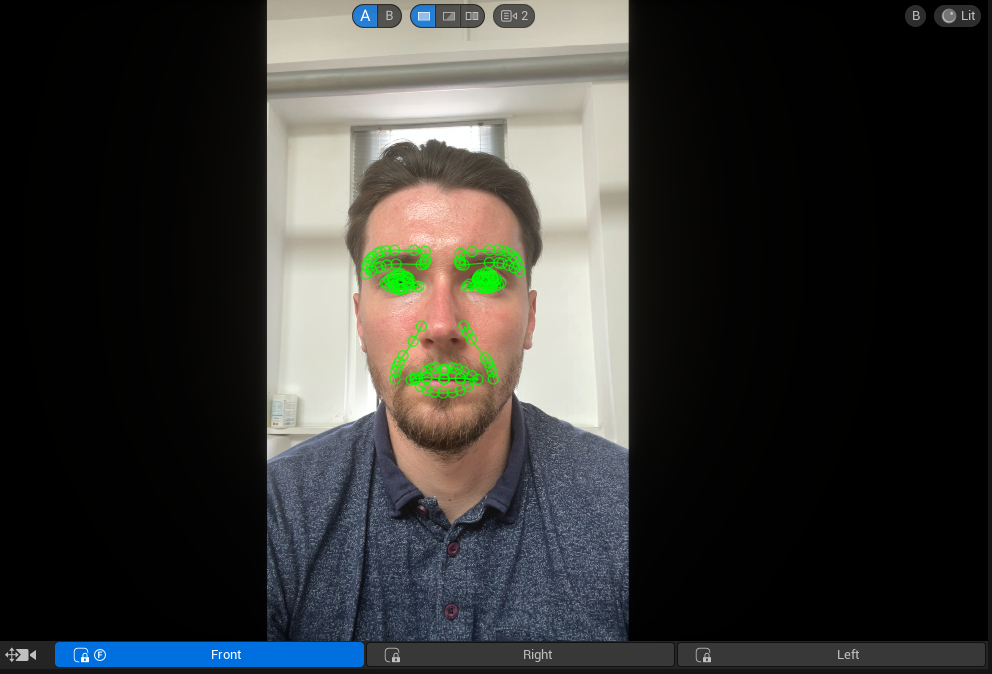

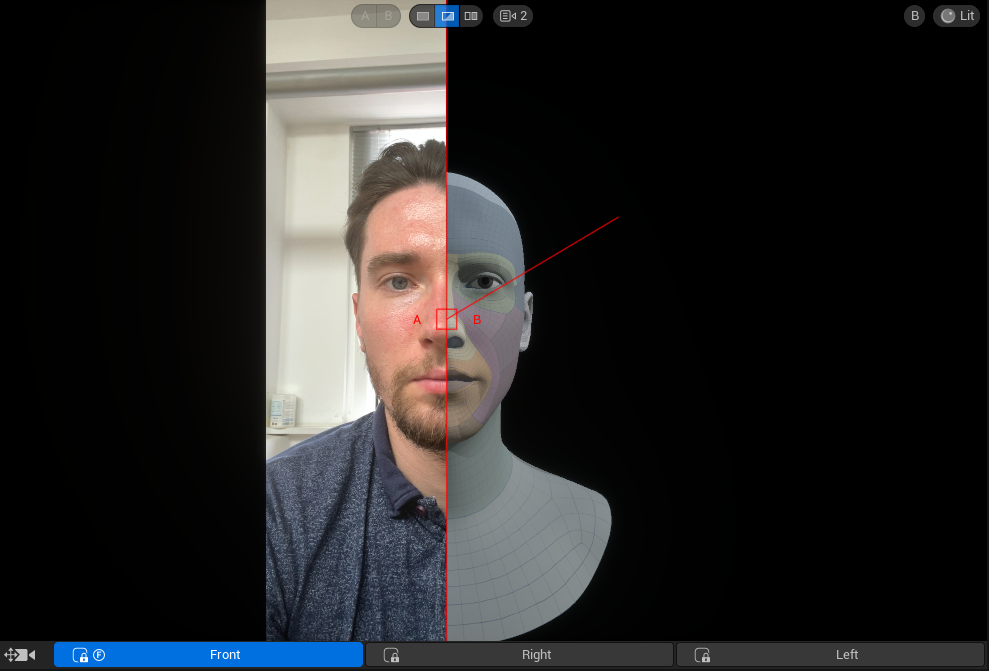

MetaHuman is a plugin for Unreal Engine that allows performance capture via the Live Link Face app directly from an iPhone (you’ll need an iPhone 12 or higher). MetaHumans are highly detailed and realistic 3D digital characters that integrate into Unreal Engine projects, and they offer some of the most accurate facial animation available. They leverage advanced technologies such as digital human modeling, facial animation, and high-fidelity rendering to achieve a level of realism that was previously much harder to attain.

We explored a couple of other options before opting for MetaHuman, but the initial phone tests made it clear how powerful it is. The realism is a game changer for face capture, and MetaHuman is really good at tracking facial expressions and translating them into animation.

We sculpted a cartoon-style character with the idea of turning it into a MetaHuman and applying facial animations. After a few attempts, we got one that worked well. With minor tweaks, especially in the eye area, our character became a MetaHuman. It was time to apply the animations. First we tested facial animations. The next step was adding some body animation to make it feel more alive.

MetaHuman worked well for facial capture but didn’t allow for full-body capture via a phone. For the purpose of our R&D tests we wanted to keep things small scale, so we needed to try out some other motion capture tools that could do this. We wanted to feed in footage of a person acting, so the two tools we tried were Move.ai and Rokoko. The multi-camera setup on Move.ai edged it, as it helps capture more accurate data. A plus side of these tools is that they can be used outside of Unreal Engine, in the other software and workflows that we use.

Most mo-cap software uses a combination of advanced AI, computer vision, biomechanics, and physics to track multiple points on the human body, particularly when working from footage without any markers. Our first tests were simple idle animations to see how well it worked. Once this was established, we moved on to trying the technique on custom animations and characters.

Below you can see the outcome of a more elaborate test. Once the video was captured, we cleaned up all the animation in iClone and exported it. As you can see, the character is a bit wobbly/drunk at this stage, but the angles and level of detail we’re getting from a basic setup of phone footage shot in an office are quite staggering. Once we can get this into a studio, with more control and light, we’ll be able to use this method on our character animations. A bit of research time well spent.